ATLAS Tier-1 Research

TRIUMF provides one of ten international Tier-1 data-intensive computing centres for the ATLAS detector’s distributed computing network, part of the world’s largest and most advanced scientific computing grid.

The Tier-1 Centre is part of TRIUMF’s major collaboration in the ATLAS detector at CERN’s Large Hadron Collider (LHC), including significant contributions to the detector design and construction. The ATLAS detector is best known for its central role in the 2012 discovery of the Higgs boson.

The ATLAS detector generates vast amounts of data, about 10 petabytes per year. This is equivalent to the storage capacity of 200,000 Blu-ray discs, which if stacked in their cases would form a tower 2600 metres tall. The storage, processing and physics modelling of this data are as crucial to particle physics discovery as the ATLAS detector itself.
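The comparison above can be checked with a short back-of-envelope calculation. The disc capacity (50 GB, a dual-layer disc) and the case thickness (13 mm) are assumptions chosen for illustration; the text does not state them.

```python
# Back-of-envelope check of the storage comparison.
# Assumptions (not stated in the text): decimal units, 50 GB per disc,
# and a case roughly 13 mm thick.
PETABYTE = 10**15
GIGABYTE = 10**9

annual_data_bytes = 10 * PETABYTE          # about 10 petabytes per year
disc_capacity_bytes = 50 * GIGABYTE        # assumed disc capacity
case_thickness_mm = 13                     # assumed case thickness

discs_needed = annual_data_bytes // disc_capacity_bytes
tower_height_m = discs_needed * case_thickness_mm / 1000

print(discs_needed)     # 200000
print(tower_height_m)   # 2600.0
```

Under these assumptions the figures quoted in the text are reproduced exactly.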

To manage this huge amount of data, the ATLAS collaboration operates a sophisticated, distributed network of thirteen Tier-1 computer centres and about 100 smaller Tier-2 facilities used primarily for data analysis and simulations. The ATLAS network is part of the Worldwide LHC Computing Grid (WLCG), the global network that stores, distributes and analyses all LHC data.

A founding member of the WLCG, TRIUMF hosts Canada’s only Tier-1 centre. All of ATLAS’ Tier-1 centres are run by national physics laboratories or other facilities capable of providing the experimental physics culture and infrastructure needed to meet ATLAS’ stringent, around-the-clock requirements for data storage, distribution, reprocessing and security.

Since coming fully online in 2007, TRIUMF’s Tier-1 centre, supported by ten highly qualified TRIUMF staff, has performed with close to 100% uptime, supplying 10% of ATLAS’ global Tier-1 computing resources. This exceptional performance has enabled the centre to provide additional capacity during critical times in ATLAS’ science program, including the data reprocessing and modelling that enabled the 2012 confirmation of the discovery of the Higgs boson.

In 2017, TRIUMF’s Tier-1 centre began transitioning to Compute Canada’s new state-of-the-art facility at Simon Fraser University. The move, to be completed by the end of 2018, includes a full upgrade and expansion of all the centre’s compute and storage technologies. TRIUMF personnel will continue to operate the Tier-1 centre, including providing primary scientific services, management and technical support.

Read more about the ATLAS Tier-1 here

Data Science and Quantum Computing

TRIUMF has established a program in Data Science and Quantum Computing to enhance its scientific impact, drawing on its national network, international collaborations and industry contacts. Several pilot projects in which applications of machine learning could have a major impact have been identified to kick-start this activity.

Read more about TRIUMF's Data Science and Quantum Computing research topics here.

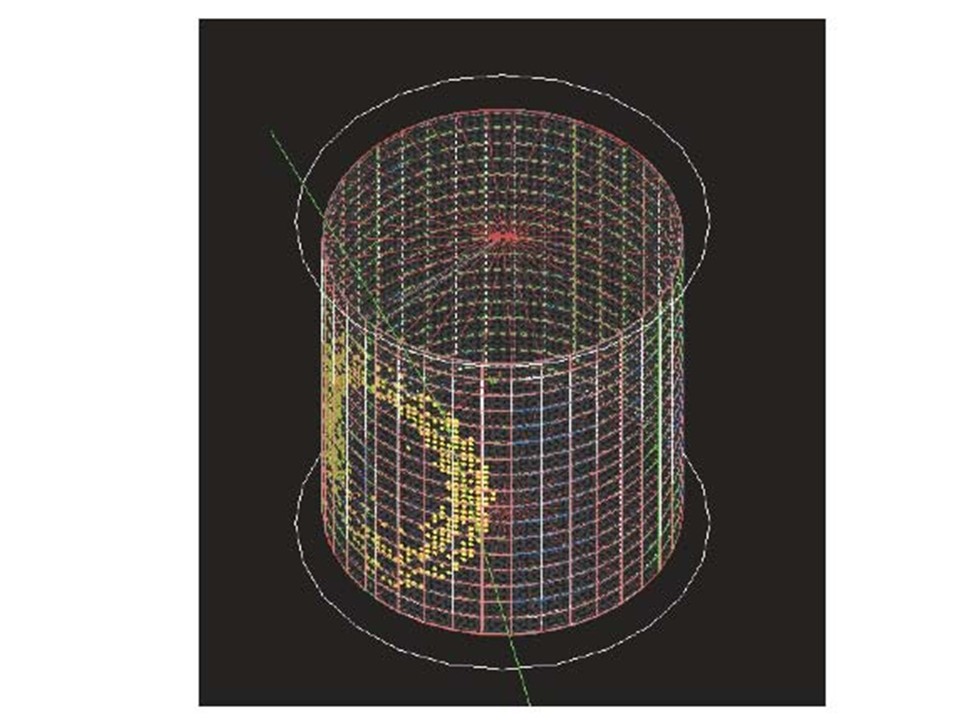

Event Reconstruction in Water Cherenkov Detectors for the Hyper-Kamiokande Project

The Hyper-Kamiokande experiment is set to begin operations in the middle of the next decade. One of its major science goals is to measure the CP-violating phase in the neutrino sector. Precise knowledge of this parameter can tell us whether neutrinos are responsible for the matter-antimatter asymmetry observed in the Universe. One of the major systematic uncertainties limiting this measurement stems from the unknown rate of neutrino interactions producing gamma backgrounds to the main electron neutrino signal. The goal of this project is to develop deep learning techniques for particle identification and multi-ring event reconstruction in a water Cherenkov detector. It will focus on simulations of Hyper-K detectors including NuPRISM, a major TRIUMF initiative. The project will explore accepted supervised training methods in conjunction with semi-supervised and unsupervised methods such as Variational Autoencoders (VAEs), to enable training directly from data and with limited labelled datasets, as well as synthetic data generation. In addition, quantum computing, and quantum annealing in particular, can help to further train the models from data and to generate synthetic samples. This has broad implications beyond the pilot project itself.

Hyper-K event display. Image credit: http://www.hyper-k.org/en/news/news-20170330.html

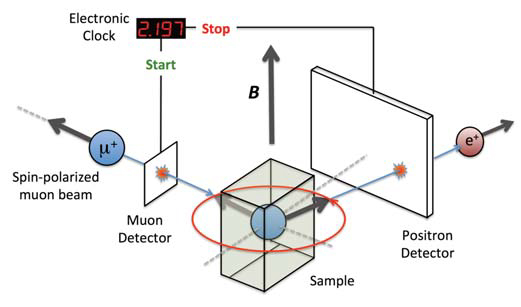

Automatic data analysis for μSR at Center for Molecular & Materials Science (CMMS)

At CMMS, muon spin relaxation (μSR) and beta-NMR measurements are carried out to experimentally evaluate spin relaxation phenomena in matter. Currently, experimentalists find the appropriate fitting function for analyzing the data empirically. The goal of this project is to establish an automated analysis system, using statistical and machine learning techniques, that helps experimentalists study their material samples and relate spectrum features to the appropriate theoretical models. The project will use the data sets accumulated over the last 30 years of CMMS activities.

μSR diagram. Image credit: https://fiveyearplan.triumf.ca/teams-tools/%CE%BCsr/

No-Core Shell Model extrapolation using Gaussian Processes

The No-Core Shell Model (NCSM) treats nuclei as systems of non-relativistic point-like nucleons interacting through realistic inter-nucleon interactions. The many-body wave function is cast into an expansion over harmonic-oscillator (HO) basis states containing up to Nmax harmonic-oscillator excitations above the lowest Pauli-principle-allowed configuration. Such a formulation of the problem allows for first-principles calculation of properties of interest such as the nuclear binding energy. In principle, the exact result is obtained as Nmax tends to infinity. In practice, for the nuclei under consideration the calculation is expected to converge for Nmax of around 20. However, the world’s largest supercomputers allow calculations only with Nmax < 16 on practical timescales, so an effective extrapolation method is needed. Since the properties of the binding energy calculation are known, but not the exact functional form of the binding energy as a function of Nmax, we attempt to extrapolate the binding energy calculation using Gaussian Processes (GP). Generic GP regression is unlikely to be informative because we lack data over a large region in Nmax. However, by imposing constraints on the first and second derivatives of the function, we hope to obtain an estimate of the binding energy at high Nmax with well-understood uncertainty.
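The limitation of generic GP regression noted above can be seen in a minimal sketch. The code below is plain, unconstrained GP regression with a squared-exponential kernel; it does not implement the derivative constraints the project proposes. The training values are a made-up decaying curve in arbitrary units, not NCSM output, and the kernel, length scale and noise level are assumptions chosen only for illustration.

```python
import numpy as np

def rbf_kernel(xa, xb, length_scale=4.0, variance=1.0):
    """Squared-exponential covariance between two 1-D arrays of inputs."""
    diff = xa[:, None] - xb[None, :]
    return variance * np.exp(-0.5 * (diff / length_scale) ** 2)

def gp_predict(x_train, y_train, x_test, noise=1e-4):
    """Posterior mean and standard deviation of a zero-mean GP."""
    k_tt = rbf_kernel(x_train, x_train) + noise * np.eye(len(x_train))
    k_st = rbf_kernel(x_test, x_train)
    k_ss_diag = np.diag(rbf_kernel(x_test, x_test))
    chol = np.linalg.cholesky(k_tt)
    # Solve (K + noise*I) alpha = y using the Cholesky factor.
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    mean = k_st @ alpha
    v = np.linalg.solve(chol, k_st.T)
    var = k_ss_diag - np.sum(v * v, axis=0)
    return mean, np.sqrt(np.clip(var, 0.0, None))

# Toy stand-in for a converging sequence (arbitrary units, NOT NCSM results).
n_max_train = np.arange(2.0, 16.0, 2.0)      # 2, 4, ..., 14
toy_values = np.exp(-0.3 * n_max_train)

n_max_test = np.array([14.0, 16.0, 20.0, 30.0])
mean, std = gp_predict(n_max_train, toy_values, n_max_test)

for n, m, s in zip(n_max_test, mean, std):
    print(f"Nmax={n:4.0f}  mean={m:+.4f}  std={s:.4f}")
```

Away from the training points the predictive standard deviation returns to the prior value, so the unconstrained model says little about the limit of large Nmax. This is the gap that constraints on the derivatives are meant to close.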

Ab-initio calculation of Helium binding energy. Image credit: Physical Review C 97, 034314 (2018)

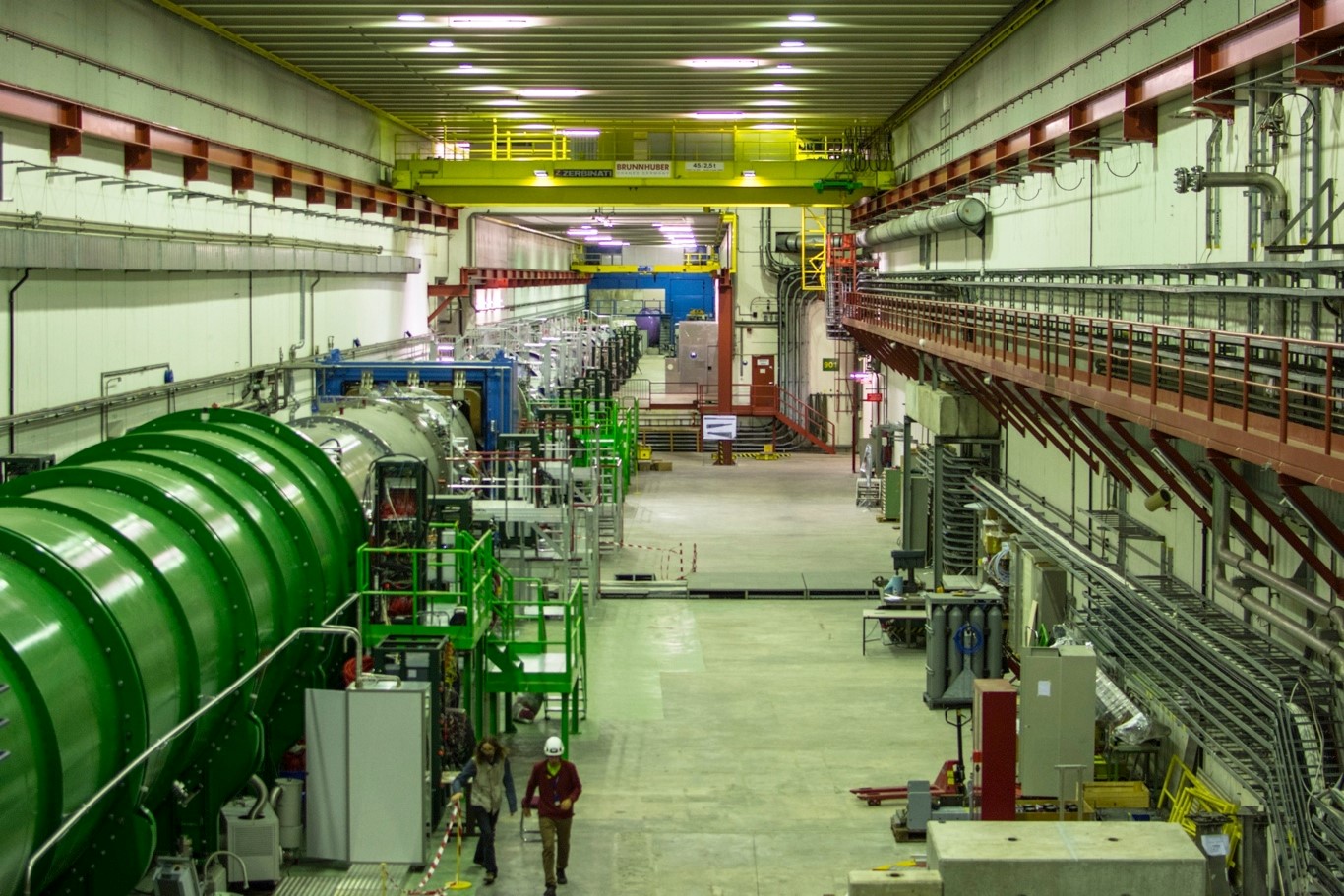

Particle identification in the NA62 experiment

The NA62 experiment at CERN aims to measure extremely rare particle decays and, through comparison with precise calculations, potentially discover new fundamental physics processes, including new particles and new forces of nature. To achieve this goal, NA62 must identify the types of particles (e.g. electrons, muons, and pions) present in the reactions with extremely high accuracy. The target is to suppress muons and electrons relative to pions to one in ten billion while keeping the pion selection efficiency at 90%. One method to achieve this uses multiple detector arrays, including the Liquid Krypton (LKr) calorimeter, the muon calorimeters, and the Ring Imaging Cherenkov (RICH) detector. The aim of this project is to improve suppression from the LKr and muon systems by an order of magnitude, to one in a million; additional suppression is available from other detector systems. The method will combine 2D image-like data from the LKr calorimeter and the muon calorimeters with other high-level event properties.
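As a rough illustration of how the two figures above relate, the sketch below assumes that the suppression factors of different detector systems are independent and therefore multiply. That independence is an assumption made here for the arithmetic, not a statement from the experiment.

```python
from fractions import Fraction

overall_goal = Fraction(1, 10**10)       # one in ten billion (from the text)
lkr_muon_target = Fraction(1, 10**6)     # one in a million (from the text)

# Assumption: suppression factors from separate detector systems are
# independent, so the overall suppression is their product.
remaining_needed = overall_goal / lkr_muon_target

print(remaining_needed)   # 1/10000
```

Under that assumption, the other detector systems would need to contribute a further suppression of about one in ten thousand.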

Photo of the NA62 detector. Image credit: https://na62.web.cern.ch/na62/

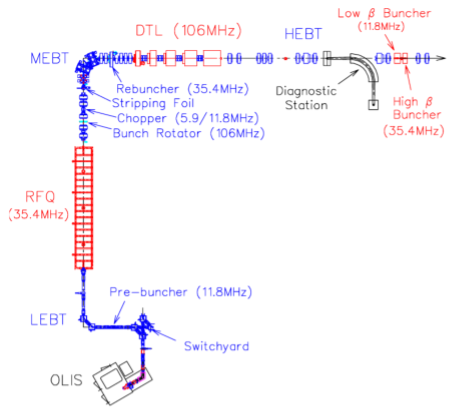

Beam transport tuning and prognostics for the TRIUMF accelerator

The TRIUMF Isotope Separator and Accelerator (ISAC) I is a post-accelerator delivering isotope beams to various nuclear physics experiments. To deliver beams of the desired intensity and quality to the experiments, multiple parameters of the ISAC-I accelerator need to be tuned in a lengthy, manual procedure. While beam transport is in principle a tractable problem, in practice describing the accelerator complex in sufficient detail for reliable simulation is a prohibitively time-consuming undertaking. We attempt to design a system for automated tuning of the accelerator based on historical and online data. The accelerator is also supported by a vast array of devices such as power supplies and turbopumps, and failures of these devices cause undesirable stoppages in beam delivery. We aim to develop a tool that suggests maintenance of devices based on the diagnostic data they produce, making use of the available historical data.
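One very simple baseline for flagging devices from their diagnostic data is a trailing-window z-score, sketched below. This is an illustrative stand-in, not the method the project uses; the function name, window size, threshold and the readings themselves are all hypothetical.

```python
from collections import deque
from statistics import mean, stdev

def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate from the mean of the
    preceding `window` readings by more than `threshold` standard
    deviations of that same window."""
    history = deque(maxlen=window)
    flagged = []
    for index, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                flagged.append(index)
        history.append(value)
    return flagged

# Made-up readings for a hypothetical power-supply current (arbitrary units).
current = [10.0, 10.1, 9.9, 10.0, 10.1, 10.0, 9.9, 12.5, 10.0, 10.1]
print(flag_anomalies(current))   # [7]
```

A real prognostics tool would likely combine many signals per device and learn from the historical failure record, but a baseline like this gives a reference point against which more elaborate models can be compared.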

Diagram of ISAC I accelerator. Image credit: Olivier Shelbaya